Cloudsourced

Pure Salon & Spa Koramangala, Bangalore

Led by a veteran hair stylist, Pure Salon & Spa offers services ranging from simple haircuts and styling for women, men, kids and brides to vibrant hair colours, conditioning treatments and Brazilian blowouts. Located in the heart of Koramangala, Bangalore, the salon has been around since 2015, providing professional hair treatments in a relaxed ambience. This is definitely one of our favourite hair salons in Bangalore.

German Stock Exchange to Offer Trading in Cloud Computing

By Ulrike Dauer

Cloud computing is an opaque market, and its contracts are hard to exit. In their quest for new revenue sources, exchange operators have consistently looked for new things to trade.

Now Deutsche Börse, the operator of the Frankfurt stock exchange and Eurex derivatives exchange, is to start trading in spare cloud computing capacity.

Starting in the first quarter of next year, buyers and sellers of at least one terabyte in cloud-computing dataspace – the size of your average external home hard drive – will be able to match supply and demand via a new platform run by the exchange, with real-time prices to boot.
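
For readers unfamiliar with exchange mechanics, the sketch below shows one common way an order book can match supply and demand: price-time priority, where the best price trades first and earlier orders win ties. It assumes capacity is traded in whole terabytes with a 1 TB minimum lot (the minimum comes from the article). The `Order` class, the `match` function and the rule that trades execute at the resting order's price are hypothetical illustrations; the article does not describe Deutsche Börse's actual matching rules.

```python
from dataclasses import dataclass

MIN_LOT_TB = 1  # minimum tradable size, per the article (at least one terabyte)


@dataclass
class Order:
    trader: str
    side: str         # "buy" or "sell"
    price: float      # price per TB (hypothetical units)
    quantity_tb: int
    seq: int          # arrival order, used for time priority


def match(orders):
    """Hypothetical price-time-priority matching of cloud-capacity orders.

    Each incoming order trades against the best-priced resting order on the
    other side (earlier order wins ties); trades execute at the resting price.
    Returns a list of (buyer, seller, price, quantity_tb) tuples.
    """
    bids, asks, trades = [], [], []
    for incoming in sorted(orders, key=lambda o: o.seq):
        if incoming.quantity_tb < MIN_LOT_TB:
            raise ValueError("order below minimum lot size")
        book = asks if incoming.side == "buy" else bids
        while incoming.quantity_tb > 0 and book:
            if incoming.side == "buy":
                # Best ask: lowest price, then earliest arrival
                best = min(book, key=lambda o: (o.price, o.seq))
                crosses = best.price <= incoming.price
            else:
                # Best bid: highest price, then earliest arrival
                best = min(book, key=lambda o: (-o.price, o.seq))
                crosses = best.price >= incoming.price
            if not crosses:
                break  # no resting order at an acceptable price
            qty = min(incoming.quantity_tb, best.quantity_tb)
            buyer, seller = (incoming, best) if incoming.side == "buy" else (best, incoming)
            trades.append((buyer.trader, seller.trader, best.price, qty))
            incoming.quantity_tb -= qty
            best.quantity_tb -= qty
            if best.quantity_tb == 0:
                book.remove(best)
        if incoming.quantity_tb > 0:
            # Unfilled remainder rests on its own side of the book
            (bids if incoming.side == "buy" else asks).append(incoming)
    return trades


orders = [
    Order("provider_a", "sell", 30.0, 5, seq=1),
    Order("provider_b", "sell", 28.0, 2, seq=2),
    Order("buyer_x", "buy", 29.0, 3, seq=3),
]
print(match(orders))  # [('buyer_x', 'provider_b', 28.0, 2)]
```

Here the bid at 29 per TB fills 2 TB from the cheaper seller at 28; the remaining 1 TB rests on the book because the other offer, at 30, is above the bid.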

The move comes as more companies consider using the cloud, which stores data away from the local computer and makes it available on demand from remote servers.

IT advisory firm International Data Corp. forecasts annual growth rates of up to 40% in Europe’s cloud infrastructure market over the next seven years as the region tries to catch up with developments in the U.S. and Asia.

Deutsche Börse’s new joint venture, Cloud Exchange AG, set up with Zimory, a Berlin-based software developer that does not itself provide cloud capacity, hopes to be a “catalyst” for that growth, said management board members Maximilian Ahrens and Michael Osterloh. Deutsche Börse, which traditionally runs marketplaces for stocks, bonds and derivatives, has already gained some experience with a new market when it launched the EEX European Energy Exchange.

At the moment, pricing of cloud services remains opaque because each transaction involves only the buyer and the seller, with no exchange to publish prices.

Several commercial cloud marketplaces exist, such as Spot Instances and the Reserved Instance Marketplace from Amazon Web Services and SpotCloud from Virtustream, but each is tied to a single vendor.

Clients and potential clients of cloud computing services also complain about the lengthy procedures required to close individual cloud contracts, and it can take more than a year before capacity can easily be sold on.

Deutsche Börse hopes to speed up the process substantially by offering standardized products and procedures for admission, trading, settlement and surveillance via the new technical platform, for which it will charge a fee.

Buyers of cloud capacity can choose the location and jurisdiction of the servers and how long they want to rent the capacity. They can also migrate between vendors and choose the level of security for their data, disaster recovery measures and data speed.

A group of up to 20 “early adopters,” including IT companies and German and international firms from sectors such as aviation and automobiles, is currently working on the details to ensure the marketplace can go live with enough liquidity early next year.

Spot trading of cloud capacity against payment in cash is expected to start in the first quarter. The launch of derivatives trading – with a time gap between trading and delivery – is envisaged for 2015.

The exchange didn’t disclose start-up costs for the new joint venture, which aims to be profitable within the next three years.

The move has certainly piqued interest elsewhere, too. On a trip to Frankfurt Monday, the chief executive of the Zagreb Stock Exchange, Ivana Gazic, said the cloud-computing business “is definitely the future” and would be a perfect secondary use for its own system, though the Croatian exchange is not looking at it at the moment.

150,000 cloud virtual machines will help solve mysteries of the Universe

OpenStack, Puppet used to build cloud for world's largest particle accelerator.

CERN data center equipment in Geneva.

CERN, the European Organization for Nuclear Research, has opened a new data center and is building a cloud network for scientists conducting experiments with data from the Large Hadron Collider on the Franco-Swiss border.

CERN's pre-existing data center in Geneva, Switzerland, is limited to about 3.5 megawatts of power. "We can't get any more electricity onto the site because the CERN accelerator itself needs about 120 megawatts," Tim Bell, CERN's infrastructure manager, told Ars.

The solution was to open an additional data center in Budapest, Hungary, which has another 2.7 megawatts of power. The data center came online in January and has about 700 "white box" servers to start with. Eventually, the Budapest site will have at least 5,000 servers in addition to 11,000 servers in Geneva. By 2015, Bell expects to have about 150,000 virtual machines running on those 16,000 physical servers.
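
The figures in this paragraph can be checked with a few lines of Python. The per-server average is a simplification: it assumes the 150,000 VMs are spread evenly over all physical servers and treats Budapest's "at least 5,000" servers as exactly 5,000.

```python
# Figures quoted in the article
geneva_mw, budapest_mw = 3.5, 2.7
geneva_servers, budapest_servers = 11_000, 5_000  # Budapest: "at least" 5,000
target_vms = 150_000

total_mw = geneva_mw + budapest_mw                 # power available across both sites
total_servers = geneva_servers + budapest_servers  # physical servers by 2015
vms_per_server = target_vms / total_servers        # average VM density, assuming even spread

print(total_servers)              # 16000
print(round(total_mw, 1))         # 6.2
print(f"{vms_per_server:.1f}")    # 9.4
```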

But the extra computing power and megawatts of electricity aren't as important as how CERN will use its new capacity. CERN plans to move just about everything onto OpenStack, an open source platform for creating infrastructure-as-a-service cloud networks similar to the Amazon Elastic Compute Cloud.

Automating the data center

OpenStack pools compute, storage, and networking equipment together, allowing all of a data center's resources to be managed and provisioned from a single point. Scientists will be able to request whatever amount of CPU, memory, and storage space they need. They will also be able to get a virtual machine with the requested amounts within 15 minutes. CERN runs OpenStack on top of Scientific Linux and uses it in combination with Puppet IT automation software.
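
Pooling means a request for an arbitrary mix of CPU, memory and disk can be placed on any host with enough free capacity. The Python sketch below illustrates the filter-then-weigh idea used by OpenStack Nova's filter scheduler: discard hosts that cannot fit the request, then rank the rest. It is a toy model, not Nova code; the `Host` and `Request` types, the host names and the "most free RAM wins" weighing rule are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    free_vcpus: int
    free_ram_mb: int
    free_disk_gb: int


@dataclass
class Request:
    vcpus: int
    ram_mb: int
    disk_gb: int


def schedule(request: Request, hosts: List[Host]) -> Optional[Host]:
    """Place an arbitrary CPU/memory/disk request on one host from a shared pool."""
    # Filter step: keep only hosts that can satisfy every requested resource
    candidates = [
        h for h in hosts
        if h.free_vcpus >= request.vcpus
        and h.free_ram_mb >= request.ram_mb
        and h.free_disk_gb >= request.disk_gb
    ]
    if not candidates:
        return None  # the pool cannot satisfy this request
    # Weigh step (assumed policy): prefer the host with the most free RAM, spreading load
    chosen = max(candidates, key=lambda h: h.free_ram_mb)
    # Claim the resources so later requests see the reduced capacity
    chosen.free_vcpus -= request.vcpus
    chosen.free_ram_mb -= request.ram_mb
    chosen.free_disk_gb -= request.disk_gb
    return chosen


pool = [
    Host("geneva-001", free_vcpus=8, free_ram_mb=32_768, free_disk_gb=500),
    Host("budapest-001", free_vcpus=16, free_ram_mb=65_536, free_disk_gb=1_000),
]
placed = schedule(Request(vcpus=4, ram_mb=16_384, disk_gb=100), pool)
print(placed.name if placed else "no capacity")                    # budapest-001
print(schedule(Request(vcpus=32, ram_mb=1_024, disk_gb=10), pool))  # None
```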

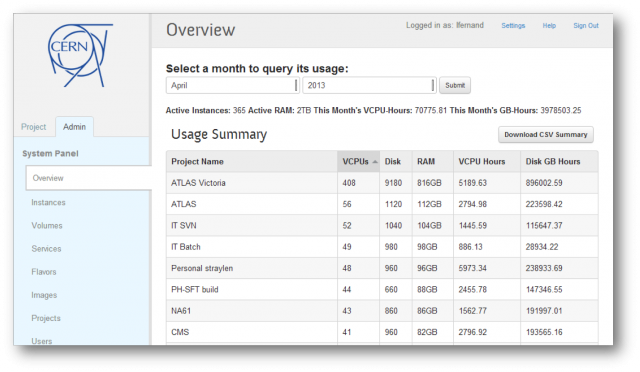

Enlarge / CERN dashboard for managing OpenStack resources.

CERN

CERN is deploying the OpenStack cloud this month, and it has already been in use by pilot testers, with 250 new VMs being created each day. By 2015 Bell hopes to have all of CERN's computing resources running on OpenStack. About 90 percent of servers would be virtualized, but even the remaining 10 percent would be managed with OpenStack.

"For the remaining 10 percent we're looking at using OpenStack to manage the bare metal provisioning," Bell said. "To have a single overriding orchestration layer helps us greatly when we're looking at accounting, because we're able to tell which projects are using which resources in a single place rather than having islands of resources."
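
Bell's point about accounting is that when VMs and bare-metal machines are all registered with one orchestration layer, per-project usage becomes a single aggregation rather than a merge across separate systems. A minimal Python sketch, assuming hypothetical usage records of the form (project, resource kind, cores); the record format and project names are invented and do not reflect OpenStack's accounting APIs.

```python
from collections import defaultdict

# Hypothetical usage records from a single orchestration layer:
# (project, resource kind, cores). Project names are invented.
records = [
    ("project-a", "vm", 8),
    ("project-b", "vm", 16),
    ("project-a", "bare_metal", 32),
    ("project-b", "vm", 4),
]


def cores_by_project(records):
    """Sum cores per project, whether they come from VMs or bare-metal hosts."""
    totals = defaultdict(int)
    for project, _kind, cores in records:
        totals[project] += cores
    return dict(totals)


print(cores_by_project(records))  # {'project-a': 40, 'project-b': 20}
```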

CERN began virtualizing servers with the KVM and Hyper-V hypervisors a few years ago, deploying Linux on KVM and Windows on Hyper-V. Virtualization improved things over the old days when scientists would have to wait months to procure a new physical server if they needed an unusual configuration. But even with virtualization and Microsoft's System Center Virtual Machine Manager software, things were somewhat limited.

Scientists had a Web interface to request virtual machines, but "we were only offering four different configurations," Bell said. With OpenStack, scientists will be able to ask for whatever combination of resources they'd like, and they can upload their own operating system images to the cloud-based virtual machines.

"We provide standard Windows and Scientific Linux images," Bell said. Those OS images are pre-loaded on cloud VMs and supported by the IT department. Scientists can load other operating systems, but they do so without official support from the IT department.

Moreover, OpenStack will make it easier to scale up to those aforementioned 150,000 virtual machines and manage them all.

CERN relies on three OpenStack programs. These are Nova, which builds the infrastructure-as-a-service system while providing fault-tolerance and API compatibility with Amazon EC2 and other clouds; Keystone, an identity and authentication service; and Glance, designed for "discovering, registering, and retrieving virtual machine images."

CERN uses Puppet to configure virtual machines and the aforementioned components. "This allows easy configuration of new instances such as Web servers using standard recipes from Puppet Forge," a repository of modules built by the Puppet open source community, Bell said.

There is still some manual work each time a virtual machine is allocated, but CERN plans to further automate that process later on using OpenStack's Heat orchestration software. All of this will help in deploying different operating system images to different people and help CERN upgrade users from Scientific Linux 5 to Scientific Linux 6. (Scientific Linux is built from the Red Hat Enterprise Linux source code and tailored to scientific users.)

"In the past we would have to reinstall all of our physical machines with the right version of the operating system, whereas now we're able to dynamically provision the operating system that people want as they ask for it," Bell said.

Keeping some things separate from OpenStack

CERN's OpenStack deployment required some customization to interface with a legacy network management system and to work with CERN's Active Directory setup, which has directory information on 44,000 users. Although CERN intends to put all of its servers under OpenStack, other parts of the computing infrastructure will remain separate.

CERN has block storage set aside to go along with OpenStack virtual machine instances, but the bulk storage that holds most of its physics data is managed by a CERN-built system called Castor. This includes 95 petabytes of data on tape for long-term storage, in addition to disk storage.

CERN experiments produce a petabyte of data a second, which is filtered down to about 25GB per second to make it more manageable. (About 6,000 servers perform this filtering. Although they are in addition to the servers managed by the IT department, they too will be devoted to OpenStack during the two years in which the Large Hadron Collider is shut down for upgrades.)
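
To put the filtering step in proportion: assuming decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), going from a petabyte to about 25GB per second keeps roughly one byte in 40,000. The Python below only does that arithmetic; with binary units the factor would be slightly higher.

```python
PETABYTE = 10**15  # bytes, decimal units (assumption)
GIGABYTE = 10**9   # bytes, decimal units (assumption)

raw_rate = 1 * PETABYTE        # bytes per second produced by the experiments
filtered_rate = 25 * GIGABYTE  # bytes per second kept after filtering

reduction_factor = raw_rate // filtered_rate
kept_fraction = filtered_rate / raw_rate

print(reduction_factor)          # 40000
print(f"{kept_fraction:.4%}")    # 0.0025%
```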

CERN is sticking with Castor instead of moving to OpenStack's storage management system. "We are conservative in this area due to the large volumes and efforts to migrate to a new technology," Bell noted. The migration is smoother on the compute side, since OpenStack builds on existing virtualization technology. Bell said CERN's OpenStack cloud is being deployed by just three IT employees.

"Having set up the basic configuration templates [with Puppet], we're now able to deploy hypervisors at a fairly fast rate. I think our record is about 100 hypervisors in a week," Bell said. "Finding a solution that would run at that scale [150,000 virtual machines] was something a few years ago we hadn't conceived of, until OpenStack came along."

EMC to train 30,000 in cloud computing

Mumbai, Nov 9 — EMC Data Storage Systems India, a subsidiary of US-based EMC Corporation, plans to train around 30,000 people in cloud computing, data science and big data analytics by 2013 through its new course, a top company official said Thursday.

Rajesh Janey, president, EMC India and SAARC, told IANS that the Indian cloud computing market (use of computing resources delivered as a service over the internet), currently estimated at $4 billion, was likely to touch $4.5 billion, while the business opportunity in big data (data sets too large to process with existing tools) was expected to touch $300 million in a couple of years.

"However, there is a huge shortage of manpower in these domains," he said.

According to Janey, the new certification courses will be part of the EMC Academic Alliance, which has tie-ups with over 250 educational institutes in India, and will also be offered to EMC's customers and partners.

Janey said that in the digital era, individual needs and sentiments had become more prevalent, and businesses and governments had the opportunity to understand them on a mass scale in real time and take the steps needed to transform services and delivery.

"Cloud computing is transforming IT and big data is transforming business. But there is a shortage of people with the requisite skills. It is estimated that there is a global shortage of around 192,000 data scientists," Janey said.

He said the new data science and big data analytics training and certification helps build a solid foundation for data analytics, with a focus on the opportunities and challenges presented by big data.

The new Cloud Infrastructure and Services Associate-Level Training and Certification provides an essential foundation in technical principles and concepts, enabling IT professionals to make informed business and technical decisions about migrating to the cloud.

Citing an EMC-Zinnov study, Janey said digital information is creating new opportunities in cloud computing and data.

Janey said the cloud opportunity in India is expected to create 100,000 jobs by 2015 from 10,000 in 2011.
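
Growth forecasts like these are often expressed as a compound annual growth rate (CAGR). Applying the standard formula, CAGR = (end / start)^(1 / years) - 1, to the jobs figures above (10,000 in 2011 to 100,000 by 2015, treated here as exactly four years of annual compounding) gives an implied rate of roughly 78 percent a year. A short Python check:

```python
def cagr(start, end, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Jobs figures from the article; four years of annual compounding is an assumption
jobs_growth = cagr(10_000, 100_000, years=2015 - 2011)
print(f"{jobs_growth:.1%}")  # 77.8%
```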

Apart from meeting domestic demand for big data, India has an opportunity to address a global market expected to touch $25 billion.

India's potential to tap this market is around $1 billion by 2015, at a compound annual growth rate (CAGR) of 83 percent, Janey said, citing a NASSCOM-CRISIL study.

Rackspace versus Amazon: The big data edition

By Derrick Harris

Rackspace is busy building a Hadoop service, giving the company one more avenue to compete with cloud kingpin Amazon Web Services. However, the two services — along with several others on the market — highlight just how different seemingly similar cloud services can be.

Rackspace has been on a tear over the past few months releasing new features that map closely to the core features of the Amazon Web Services platform, only with a Rackspace flavor that favors service over scale. Its next target is Amazon Elastic MapReduce, which Rackspace will be countering with its own Hadoop service in 2013. If AWS and Rackspace are, indeed, the No. 1 and No. 2 cloud computing providers around, it might be easy enough to make a decision between the two platforms.

In the cloud, however, the choices are never as simple as black or white.

Amazon versus Rackspace is a matter of control

Discussing its forthcoming Hadoop service during a phone call on Friday, Rackspace CTO John Engates highlighted the fundamental product-level differences between his company and its biggest competitor, AWS. Right now, for users, it’s primarily a question of how much control they want over the systems they’re renting, and Rackspace comes down firmly on the side of maximum control.

For Hadoop specifically, Engates said Rackspace’s service will “really put [users] in the driver’s seat in terms of how they’re running it” by giving them granular control over how their systems are configured and how their jobs run (courtesy of the OpenStack APIs, of course). Rackspace is even working on optimizing a portion of its cloud so the Hadoop service will run on servers, storage and networking gear designed specifically for big data workloads. Essentially, Engates added, Rackspace wants to give users the experience of owning a Hadoop cluster without actually owning any of the hardware.

“It’s not MapReduce as a service,” he added, “it’s more Hadoop as a service.”

The company partnered with Yahoo spinoff Hortonworks on this in part because of its expertise and in part because its open source vision for Hadoop aligns closely with Rackspace’s vision around OpenStack. “The guys at Hortonworks are really committed to the real open source flavor of Hadoop,” Engates said.

Rackspace’s forthcoming Hadoop service appears to contrast somewhat with Amazon’s three-year-old and generally well-received Elastic MapReduce service. The latter lets users write their own MapReduce jobs and choose the number and types of servers they want, but doesn’t give users system-level control on par with what Rackspace seems to be planning. For the most part, it comports with AWS’s tried-and-true strategy of giving users some control of their underlying resources, but generally trying to offload as much of the operational burden as possible.
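
Elastic MapReduce users write their own MapReduce jobs. For readers new to the model, this single-process Python sketch shows its three stages on the classic word-count example: a map function emits key-value pairs, the framework groups values by key (the shuffle), and a reduce function combines each group. It illustrates the programming model only; it is not the Hadoop or Elastic MapReduce API, and a real job would run these stages in parallel across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Emit (word, 1) for every word in one input record
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group intermediate values by key, as the framework does between map and reduce
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Combine all values for one key into a single result
    return key, sum(values)


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    intermediate = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


print(run_job(["Hadoop as a service", "not MapReduce as a service"]))
# {'hadoop': 1, 'as': 2, 'a': 2, 'service': 2, 'not': 1, 'mapreduce': 1}
```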

Elastic MapReduce also isn’t open source, but is an Amazon-specific service designed around Amazon’s existing S3 storage system and other AWS features. When AWS did choose to offer a version of Elastic MapReduce running a commercial Hadoop distribution, it chose MapR’s high-performance but partially proprietary flavor of Hadoop.

It doesn’t stop with Hadoop

Rackspace is also considering getting into the NoSQL space, perhaps with hosted versions of the open source Cassandra and MongoDB databases, and here too it likely will take a different tack than AWS. For one, Rackspace still has a dedicated hosting business to tie into, where some customers still run EMC storage area networks and NetApp network-attached storage arrays. That means Rackspace can’t afford to lock users into a custom-built service that doesn’t take their existing infrastructure into account or that favors raw performance over enterprise-class features.

Rackspace needs stuff that’s “open, readily available and not unique to us,” Engates said. Pointing specifically to AWS’s fully managed and internally developed DynamoDB service, he suggested, “I don’t think it’s in the fairway for most customers that are using Amazon today.”

Perhaps, but early DynamoDB success stories such as IMDb, SmugMug and Tapjoy suggest the service isn’t without an audience willing to pay for its promise of a high-performance, low-touch NoSQL data store.

Which is better? Maybe neither

There’s plenty of room for debate over whose approach is better, but the answer for many would-be customers might well be neither. When it comes to hosted Hadoop services, both Rackspace and Amazon have to contend with Microsoft’s newly available HDInsight service on its Windows Azure platform, as well as IBM’s BigInsights service on its SmartCloud platform. Google appears to have something cooking in the Hadoop department, as well. For developers who think all these infrastructure-level services are too much work, higher-level services such as Qubole, Infochimps or Mortar Data might look more appealing.

The NoSQL space is rife with cloud services, too, primarily focused on MongoDB but also including hosted Cassandra and CouchDB-based services.

In order to stand apart from the big data crowd, Engates said Rackspace is going to stick with its company-wide strategy of differentiation through user support. Thanks to its partnership with Hortonworks and the hybrid nature of OpenStack, for example, Rackspace is already helping customers deploy Hadoop in their private cloud environments while its public cloud service is still in the works. “We want to go where the complexity is,” he said, “where the customers value our [support] and expertise.”

Gartner sees cloud computing, mobile development putting IT on edge

By Jack Vaughan

ORLANDO, Fla. -- A variety of mostly consumer-driven forces challenge enterprise IT and application development today. Cloud computing, Web information, mobile devices and social media innovations are converging to dramatically change modern organizations and their central IT shops. Industry analyst group Gartner Inc. describes these forces as forming a nexus, a connected group of phenomena, which is highly disruptive.

As in earlier shifts to client-server computing and Web-based e-commerce, the mission of IT is likely to come under serious scrutiny. How IT should adjust was a prime topic at this week's Gartner ITxpo conference held in Orlando, Fla.

"A number of things are funneling together to cause major disruption to your environment," David Cearley, vice president and Gartner fellow, told CIOs and other technology leaders among the more than 10,000 assembled at the Orlando conference.

"Mobile has an outsized impact in terms of disruption. The mobile experience is eclipsing the desktop experience," said Cearley, who cited the large number of mobile device types as a challenge to IT shops that had over the years achieved a level of uniformity via a few browsers and PC types. "You need to prepare for heterogeneity," he told the audience.

Crucially, mobile devices coupled with cloud computing can change the basic architecture of modern corporate computing.

"You need to think about 'cloud-client' architecture, instead of client-server," Cearley said. "The cloud becomes your control point for what lives down in the client. Complex applications won't necessarily work in these mobile [settings]."

This could mean significant changes in skill sets required for enterprise application development. The cloud-client architecture will call for better design skills for front-end input. Development teams will have to make tradeoffs between use of native mobile device OSes and HTML5 Web browser alternatives, according to Cearley. The fact that these devices are brought into the organization by end users acting as consumers also is a factor.

"The user experience is changing. Users have new expectations," he said. For application architects and developers, he said, there are "new design skills required around how applications communicate and work with each other."

The consequences are complex. For example, software development and testing is increasingly not simply about whether software works or not, according to one person on hand at Gartner ITxpo. "We get great pressures to improve the quality of service we provide. It's not just the software, it's how the customer interacts with the software," said Dave Miller, vice president of software quality assurance at FedEx Services.

Cloud computing and mobile apps on the horizon

Shifts in software architecture, such as those seen today in cloud and mobile applications, have precedents, said Miko Matsumura, senior vice president of platform marketing and developer relations at San Mateo, Calif.-based mobile services firm Kii Inc. In the past, there have been "impedance mismatches," for example, between object-oriented software architecture and relational data architecture, he noted.

Mobile development is causing conventional architecture to evolve. "What happens is you see the breakdown of the programming model," said Matsumura, a longtime SOA thought leader whose firm markets a mobile back-end-as-a-service offering. "Now we have important new distinctions. A whole class of developers treat mobile from the perspective of cloud."

"Now, if you are a mobile developer, you don't need to think about the cloud differently than you think of your mobile client," he said. With "client cloud," he continued, "it's not a different programming model, programming language or programming platform." That differs from the situation in most development shops today, where Java or .NET teams and JavaScript teams work with different models, languages and platforms.

The changes in application development and application lifecycles are telling, according to Gartner's Jim Duggan, vice president for research. "Mobile devices and cloud -- a lot of these things challenge conventional development," he said. He said Gartner estimates that by 2015 mobile applications will outnumber those for static deployment by four to one. This will mean that IT will have to spend more on developer training and will likely do more outsourcing as well.

"Deciding which [are the skills that] you need to have internally is going to change the way your shop evolves," he said.

Look out: the chief digital officer is coming

Underlying the tsunami of disruptive consumerist forces Gartner sees is a wholesale digitization of commerce. At the conference, Gartner analysts said such digitization of business segments will lead to a day when every budget becomes an IT budget. Firms will begin to create the role of chief digital officer as part of business unit leadership, Gartner boldly predicted, estimating that 25% of organizations will have chief digital officers in place by 2015.

The chief digital officer title may become more common, according to Kii's Matsumura. Today, marketing departments often control the mobile and social media agendas within companies, he said.

"We are seeing a convergence point between the human side of the business -- in the form of marketing -- and the 'machine-side,' in the form of IT," he said.

ORLANDO, Fla. -- A variety of mostly consumer-driven forces challenge enterprise IT and application development today. Cloud computing, Web information, mobile devices and social media innovations are converging to dramatically change modern organizations and their central IT shops. Industry analyst firm Gartner Inc. describes these forces as forming a highly disruptive nexus: a connected group of phenomena.

As in earlier shifts to client-server computing and Web-based e-commerce, the mission of IT is likely to come under serious scrutiny. How IT should adjust was a prime topic at this week's Gartner ITxpo conference held in Orlando, Fla.

"A number of things are funneling together to cause major disruption to your environment," David Cearley, vice president and Gartner fellow, told CIOs and other technology leaders among the more than 10,000 assembled at the Orlando conference.

"Mobile has outweighed impact in terms of disruption. The mobile experience is eclipsing the desktop experience," said Cearley, who cited the large number of mobile device types as a challenge for IT shops that had, over the years, achieved a degree of uniformity through a few browsers and PC types. "You need to prepare for heterogeneity," he told the audience.

Crucially, mobile devices coupled with cloud computing can change the basic architecture of modern corporate computing.

What It Will Take to Sell Cloud to a Skeptical Business

The other week, I covered a report that asserts Fortune 500 CIOs are seeing mainly positive results from their cloud computing efforts. The CIOs seem happy, but that doesn’t mean others in the organization share that enthusiasm.

Andi Mann, vice president for strategic solutions at CA Technologies, for one, questions whether CIOs are the right individuals to talk to about the success of cloud computing. And he takes the original Navint Partners report – which was based on qualitative interviews with 20 CIOs – to task.

“Twenty CIOs are happy, so far, with cloud computing. But what about their bosses, their employees, their organization’s partners and their customers?” he asks. “Are they happy?”

In order to understand the business success of cloud computing or any innovative technology, Mann points out, “we need to (a) talk to more than just CIOs, (b) be certain we’re asking all the right questions, and (c) hit a larger sample than just four percent of the Fortune 500.”

CA Technologies did just that, commissioning IDG Research Services to conduct a global study on the state of business innovation. “To be ‘innovative’ means your organization is capitalizing on cutting edge advancements such as cloud computing, big data, enterprise mobility, devops, the IT supply chain, and so on,” he says.

And Mann delivers this thought-provoking news: “After talking to 800 IT and business executives at organizations with more than $250 million in revenue, we didn’t find a horde of happy campers. Yes, some CIOs are happy with the cloud, and that’s a good thing. But here’s the rub. Firstly, CIOs are not the principal drivers of innovation. Users are. Second, our research found business leaders are, in many cases, unhappy with what their CIOs are doing.”

Business executives are not as excited as IT is about cloud computing, either, Mann continues. “Forty percent of IT respondents say their organization plans to invest in cloud computing over the next 12 months, versus 31% of business executives. Also, business executives give IT low marks in enabling and supporting innovation. One in two rate IT poorly in terms of having made sufficient investment in resources and spending on innovation; 48% think IT isn’t moving fast enough; 37% think IT doesn’t have the right skills; and 40% say IT isn’t receptive to new ideas when approached by the business.”

While cloud offers IT leaders the ability to keep the lights on for less, that’s only one dimension to consider, Mann says. “The crucial question to answer is if the IT organization is innovating with the cloud. Savings are one thing. Moving the organization ahead and keeping it technologically competitive is another.”

Mann provides examples of questions the business needs to be asking before a cloud approach is contemplated: “The business wants IT to explore the world of digital technologies and deliver answers. How can you align mobile and consumerization with the business interest? How do you connect with millions of people over social media? How do you find a new market and get into that market in a different geography or a different product area? How do you use technology to smooth out the bumps in an M&A activity?”

CIOs need to help the business understand that the cloud has many other business advantages beyond cost efficiencies, says Mann. “But the only way to capitalize on those advantages is for the executive leadership to allocate budget appropriately. We need to soften the focus on cost cutting, and sharpen the focus on IT empowerment and business alignment.”

To get there, he says, “CIOs need to make a case for greater investment in cloud computing, not simply to cut costs longer term, but to drive innovation and competitive advantage. Can the CIO win additional budget if she could achieve success according to real business KPIs such as return on equity, return on assets, shareholder value, revenue growth, or competitive position? If the CIO could reliably and measurably move the needle on such things, instead of just auditing costs, the ‘happiness gap’ between the business and IT could be closed.”

It’s time to increase our understanding of the broader and deeper impact of cloud on organizations. “We’ve been talking about IT-to-business alignment for generations. Yet we keep surveying the IT guys when we should be surveying the organizations they serve,” says Mann. “I’m concerned that, statistically, a significant percentage of Fortune 500 business executives might not be happy with the cloud. And that, by far, is the more important number for CIOs to measure and improve.”
